Automatic bolus tracking in abdominal CT scans with convolutional neural networks

Introduction

With advances in imaging technology and the exponential growth in the volume of medical imaging data, the current computed tomography (CT) workflow involves performing complex scanning protocols and processing requirements on a regular basis (1). Manual procedures are not only time-consuming but also subject to inter-operator variances, creating potential for diagnostic inaccuracies (2). In the new era of radiomics and deep learning, convolutional neural networks (CNNs) can serve as a highly effective tool for automating classification and detection tasks for medical imaging data (3,4).

Accurate enhancement phase determination is crucial for confident lesion characterization in contrast-enhanced CT, such as for assessing hypervascular hepatocellular carcinoma (HCC) during the arterial phase (5). However, patient-specific variations such as heart rate, body weight, and circulation impairments can influence enhancement timing, introducing uncertainties and compromising confidence in diagnostic biomarkers (6,7). Bolus tracking can help individualize time delays between contrast injection and diagnostic scan initiations by tracking the enhancement of radio-opaque contrast media (typically iodine) at a predefined operator-selected region (2,8). The current bolus tracking workflow involves manual selection of a position along the patient axis for the locator scan on a two-dimensional (2D) CT topogram (also known as surview or scout scans), followed by manual selection of a region of interest (ROI) within the aorta on locator scans for tracking enhancement levels. Following the injection of intravenous contrast agent, low-dose tracker scans are executed continuously, e.g., every second, to monitor the increase in Hounsfield units (HU) within the selected ROI. Once a predefined HU-threshold is reached within the ROI, diagnostic scans are automatically initiated after predetermined time intervals that were optimized for the specific exam protocol or clinical indication (2,8).
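The triggering logic described above can be illustrated with a minimal sketch. It is not the scanner vendor's implementation; the function name `trigger_time`, the enhancement curve, the 120 HU threshold, and the 15 s post-trigger delay are all hypothetical values chosen for illustration.

```python
from typing import Optional, Sequence


def trigger_time(hu_samples: Sequence[float],
                 threshold_hu: float,
                 sample_interval_s: float = 1.0,
                 post_trigger_delay_s: float = 0.0) -> Optional[float]:
    """Return the start time of the diagnostic scan, in seconds after the
    first tracker scan, or None if the threshold is never reached."""
    for index, hu in enumerate(hu_samples):
        if hu >= threshold_hu:
            # The threshold is reached at this tracker scan; the diagnostic
            # scan starts after the protocol-specific delay.
            return index * sample_interval_s + post_trigger_delay_s
    return None


# Hypothetical mean HU within the ROI, sampled once per second.
curve = [40, 42, 45, 60, 95, 140, 190]
start = trigger_time(curve, threshold_hu=120, post_trigger_delay_s=15.0)
print(start)  # 20.0: threshold reached at t = 5 s, plus a 15 s delay
```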

Accurate bolus placement is crucial for optimal enhancement and phase determination in the bolus-tracking procedure (9). However, the manual procedure is subject to errors and intra- and inter-operator variance, particularly during the locator scan positioning step. To standardize the diagnosis process both between different patient populations and between different time-points during evaluations of a single patient (10), e.g., for treatment response assessment (11), several advancements in contrast-enhanced CT capabilities are required, including accurate contrast media quantification (12,13), normalization of iodine perfusion ratios (14), and more advanced fully automated bolus tracking techniques such as the one presented in this work.

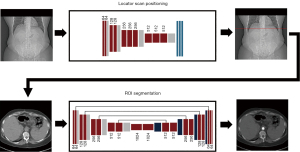

The objective of the current study is to fully automate the bolus tracking procedure in contrast-enhanced abdominal CT exams using CNNs. Our method consists of two sequential steps: (I) automatic locator scan positioning on topograms, and (II) automatic ROI positioning within the aorta on locator scans. By reducing CT operator-related decisions, our method enables improved standardization and diagnostic accuracy, as well as greater efficiency in the diagnostic review process and clinical workflow. We present the following article in accordance with the TRIPOD reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-22-686/rc).

Methods

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the institutional review board of the University of Pennsylvania and individual consent for this retrospective analysis was waived. In this study, we apply machine learning approaches using clinically available retrospective datasets to automate the bolus tracking procedure. Our method consists of two consecutive steps, each of which was developed and tested independently: (I) automatic determination of locator scan position on topograms, and (II) automatic ROI positioning within the aorta on locator scans. A schematic of this two-step process is shown in Figure 1.

While the segmentation task has been extensively addressed in the medical imaging literature (15), methods for the automatic selection of a specific slice in an acquired CT topogram are extremely limited (16). In recent years, researchers have begun to address this issue by proposing deep learning methods for the automatic detection of the third lumbar vertebra (L3) for body composition analysis. Such methods fall into two paradigms: the regression paradigm, which directly estimates the slice position from the entire CT scan as a one-dimensional (1D) output (16) or as 2D confidence maps (17), and the binary classification paradigm, which determines for each slice whether it is the target slice (18). We retained the regression paradigm for our locator scan positioning task as it is more lightweight than binary classification methods, which require extensive data annotation (i.e., classification of each slice).

Dataset collection and preparation

Retrospective topograms and locator scan images used in this study were collected from the Picture Archiving and Communication System (PACS) of our institute under a dedicated Institutional Review Board (IRB). Over three hundred CT examinations were identified and collected. Out of these cases, six were excluded due to locator scan positions which exceeded the length of the topogram, for a total of 298 samples. All samples in which the locator scan position did not exceed the topogram were retained for network training.

Collected CT exams showed a high level of patient heterogeneity (sex, age, cancer pathology, and medical state) and consisted of four different scanner models, while topograms consisted of two different pixel spacing values (Table 1). The locator scan positioning network was trained with coronal scans only due to a limited number of exams which included topograms in the sagittal view. The ROI segmentation network was trained with axial scans only. Collected coronal view topograms showed minimal imaging artifacts, though many included implants, chemo-ports, or ECG wires, which served as sources of variability. Fourteen of 298 axial scans showed significant imaging artifacts, most of which are due to high noise from insufficient dose levels or from objects outside the field of view, resulting in beam hardening artifacts, photon starvation artifacts, and truncated data artifacts. No cases were excluded due to imaging artifacts.

Table 1

| Characteristic | Samples | % |
|---|---|---|
| Sex | | |
| Male | 172 | 58 |
| Female | 126 | 42 |
| Age (years) | | |
| 20–39 | 39 | 13 |
| 40–59 | 99 | 33 |
| 60–79 | 148 | 50 |
| 80–100 | 12 | 4 |
| Initial pixel spacing (mm/px) | | |
| 1 | 194 | 65 |
| 2 | 104 | 34 |
| Manufacturer’s model name | | |
| SOMATOM Force | 137 | 46 |
| SOMATOM Definition Adaptive Scanning (AS) + | 110 | 37 |
| SOMATOM Definition AS | 32 | 11 |
| SOMATOM Definition Flash | 14 | 5 |
| Other | 5 | 2 |

Locator scan positions and ROI locations for each CT scan were selected by a single operator; in total, 27 CT operators were recorded in the collected CT dataset. Ground-truth annotations of locator scan positions and ROI locations were extracted from the respective Digital Imaging and Communications in Medicine (DICOM) attributes, which record the geometric locations originally selected by CT technologists. Locator scan positions were extracted from the Image Position Patient attribute (0020, 0032) of the coronal scan and the Slice Location attribute (0020, 1041) of the locator scan; ROI location was extracted from the Overlay Data attribute (6000, 3000). To ensure consistent input to the network, all coronal view topograms were resized to a 224×224 matrix with a pixel spacing of 2.28 mm. For both the locator scan positioning and ROI positioning algorithms, the 2D matrix was duplicated in each color channel to match the required 3-channel input of the pre-trained models.
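A minimal sketch of this preparation step is given below. The 224-pixel matrix size and 2.28 mm spacing come from the text; everything else is an assumption made for illustration: the helper names `locator_row` and `to_three_channels` are hypothetical, row 0 is assumed to sit at the z-coordinate given by Image Position Patient, and z is assumed to decrease by one pixel spacing per row. The actual DICOM parsing is omitted.

```python
TARGET_SIZE = 224          # rows and columns after resizing (from the text)
TARGET_SPACING_MM = 2.28   # pixel spacing after resizing (from the text)


def locator_row(topogram_top_z_mm, locator_z_mm):
    """Map a locator z-position to a row index on the resized topogram.

    Assumption: row 0 lies at topogram_top_z_mm and z decreases by
    TARGET_SPACING_MM with every row. Returns None when the locator
    falls outside the topogram, the situation that led to exclusion.
    """
    offset_mm = topogram_top_z_mm - locator_z_mm
    row = round(offset_mm / TARGET_SPACING_MM)
    if not 0 <= row < TARGET_SIZE:
        return None
    return row


def to_three_channels(image):
    """Duplicate a 2D grayscale matrix into three identical channels."""
    return [[[pixel, pixel, pixel] for pixel in row] for row in image]


print(locator_row(0.0, -228.0))           # 100
print(locator_row(0.0, -600.0))           # None (beyond the topogram)
print(to_three_channels([[1, 2]])[0][1])  # [2, 2, 2]
```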

Locator scan positioning

In this study, the task of locator scan positioning is formulated as a regression problem, with the goal of predicting the slice position location along the patient axis (z-position) which corresponds to the anatomical location used for bolus tracking in these examinations. The network architecture was modified by stacking a randomly initialized fully connected layer with trainable parameters to the convolutional base to output the locator scan position as an integer corresponding to the row number (in image pixels). While the optimal locator scan position for bolus tracking is not well documented in the literature, for this work we adopted the general placement of 1 cm below the diaphragm proposed by Adibi et al. (2) as a proof-of-concept for this technique. Our algorithm can be easily retrained to target other positions or anatomies for the locator scan given the corresponding annotations.

Since modern machine learning algorithms require vast amounts of training data to achieve high accuracy, we adopted a transfer learning approach to improve sample efficiency (19). In this framework, the weights of the network layers are initialized with the weights of a pre-trained CNN and frozen or fine-tuned to fit the target application. The networks were pre-trained on ImageNet, a classification database which contains over 14 million non-medical images separated into 1,000 categories (20). The network was trained on collected data, with 2D topograms as input and locator scan row number as targets.

To study the algorithm’s generalizability, 10% of the 298 collected cases were randomly selected as the external validation (test) dataset (n=30). The remaining data were randomly divided into training and validation sets in a ratio of 8:2 for development of our algorithm. The mean squared error loss function was selected for training and the weights were optimized using the Adam optimizer. We compared the performance of VGG16 (21), VGG19 (21), and ResNet50 (22) architectures to account for the effect of the number of trainable parameters and network depth on feature extraction (Table 2). We also investigated the effect of introducing additional fully connected layers on algorithmic performance.
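The split described above can be sketched as follows. Only the 10% hold-out (n=30) and the 8:2 ratio are taken from the text; the function name `split_cases`, the seed, and the rounding rule are assumptions, so the resulting training and validation counts (214 and 54) are illustrative rather than reported values.

```python
import random


def split_cases(case_ids, test_fraction=0.10, train_fraction=0.80, seed=0):
    """Randomly hold out a test set, then split the rest into train/val."""
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)           # reproducible shuffle
    n_test = round(len(ids) * test_fraction)   # 298 * 0.10 -> 30
    test, remaining = ids[:n_test], ids[n_test:]
    n_train = round(len(remaining) * train_fraction)  # 268 * 0.80 -> 214
    return remaining[:n_train], remaining[n_train:], test


train, val, test = split_cases(range(298))
print(len(train), len(val), len(test))  # 214 54 30
```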

Table 2

| Network | Layers | Trainable parameters |
|---|---|---|
| VGG16 | 16 | 14,739,777 |
| VGG19 | 19 | 20,049,473 |
| ResNet50 | 50 | 23,634,945 |

The architectures varied in network depth and number of trainable parameters.
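The trainable-parameter counts in Table 2 can be cross-checked with a little arithmetic. The sketch below is an inference, not something stated in the text: it assumes the regression head is a single dense unit applied to the flattened 7×7 feature map that a 224×224 input yields after five 2× down-samplings. For ResNet50, the trainable-parameter count of the headless base (23,534,592, with batch-normalization statistics counted as non-trainable) is taken as a given constant, as commonly reported for the Keras implementation. Under these assumptions the totals match the table.

```python
def conv_params(c_in, c_out, kernel=3):
    """Weights plus biases of one kernel x kernel convolution layer."""
    return kernel * kernel * c_in * c_out + c_out


# (output channels, number of 3x3 convolutions) for each VGG block.
VGG16_BLOCKS = [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]
VGG19_BLOCKS = [(64, 2), (128, 2), (256, 4), (512, 4), (512, 4)]


def vgg_base_params(blocks, in_channels=3):
    """Parameter count of the VGG convolutional base (no dense layers)."""
    total, c_in = 0, in_channels
    for c_out, n_convs in blocks:
        for _ in range(n_convs):
            total += conv_params(c_in, c_out)
            c_in = c_out
    return total


def head_params(feature_channels, spatial=7):
    """One dense output unit on a flattened spatial x spatial x C map."""
    return spatial * spatial * feature_channels + 1  # weights + bias


# Assumption: trainable parameters of the headless ResNet50 base.
RESNET50_BASE_TRAINABLE = 23_534_592

vgg16_total = vgg_base_params(VGG16_BLOCKS) + head_params(512)
vgg19_total = vgg_base_params(VGG19_BLOCKS) + head_params(512)
resnet50_total = RESNET50_BASE_TRAINABLE + head_params(2048)

print(vgg16_total, vgg19_total, resnet50_total)
# 14739777 20049473 23634945
```

That all three totals agree with Table 2 suggests, but does not prove, that each convolutional base was fully trainable and followed by a single fully connected output unit.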

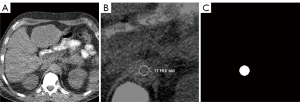

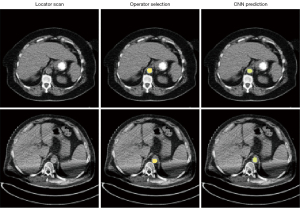

ROI segmentation

The task of ROI positioning was formulated as a segmentation problem, with the goal of localizing the aorta on locator scans. Input data consisted of 2D matrices of locator scans, with respective 2D binary masks indicating aorta position manually selected by the original CT technologist that serve as ground-truth segmentation targets. The binary masks were generated by selecting for and filling in the circle on the original overlay data extracted from DICOM data (Figure 2).
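One way to fill in a circular outline is sketched below. It is a hypothetical stand-in for the authors' procedure, which is not described in detail: the function name `fill_outline` is invented, the overlay is assumed to have already been reduced to a single closed outline (any other overlay graphics removed), and a border flood fill is used to separate outside from inside.

```python
from collections import deque


def fill_outline(outline):
    """Return a mask that is 1 on a closed outline and everywhere inside it.

    Background pixels reachable from the image border (4-connectivity) are
    treated as outside; everything else is outline or interior. A closed
    8-connected outline cannot be crossed by a 4-connected flood fill.
    """
    height, width = len(outline), len(outline[0])
    outside = [[False] * width for _ in range(height)]
    queue = deque()
    # Seed the fill with every non-outline pixel on the image border.
    for r in range(height):
        for c in range(width):
            on_border = r in (0, height - 1) or c in (0, width - 1)
            if on_border and not outline[r][c]:
                outside[r][c] = True
                queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < height and 0 <= nc < width
                    and not outside[nr][nc] and not outline[nr][nc]):
                outside[nr][nc] = True
                queue.append((nr, nc))
    return [[0 if outside[r][c] else 1 for c in range(width)]
            for r in range(height)]


ring = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
mask = fill_outline(ring)
print(mask[2][2])  # 1: the enclosed pixel is now part of the mask
```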

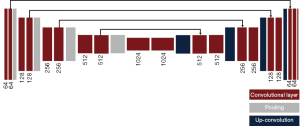

A UNet was used to perform the segmentation task (Figure 3). The UNet architecture consists of multiple down-sampling and up-sampling blocks, each with a set of convolutional units, where each unit consists of a sequence of convolution, batch normalization, and non-linear activation (23). Binary cross-entropy was selected as the loss function and the model was optimized using the Adam optimizer. Since the network generates 2D matrices as output, no further adjustments were made to the original architecture. Network predictions consisted of 2D binary masks displaying ROI location predictions. To quantify network error, the center of mass was determined by:

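$$x_{\mathrm{CM}} = \frac{\sum_i m_i x_i}{\sum_i m_i}, \qquad y_{\mathrm{CM}} = \frac{\sum_i m_i y_i}{\sum_i m_i}$$

where $m_i$ is the pixel value at coordinate $(x_i, y_i)$.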

Error was defined as the Euclidean distance between the centers of mass of the expected and predicted ROIs in millimeters.
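The error metric can be sketched as follows, using the standard intensity-weighted center of mass. The function names and the isotropic pixel spacing passed to `roi_error_mm` are assumptions for illustration; the spacing of the locator scans is not given in the text, so 0.8 mm is a hypothetical value.

```python
import math


def center_of_mass(mask):
    """Intensity-weighted center of mass (x, y) of a 2D matrix, in pixels."""
    total = sum(sum(row) for row in mask)
    if total == 0:
        raise ValueError("mask is empty; center of mass is undefined")
    x = sum(m * col for row in mask for col, m in enumerate(row)) / total
    y = sum(m * r for r, row in enumerate(mask) for m in row) / total
    return x, y


def roi_error_mm(expected, predicted, pixel_spacing_mm):
    """Euclidean distance between the two centers of mass, in millimeters."""
    ex, ey = center_of_mass(expected)
    px, py = center_of_mass(predicted)
    return math.hypot(ex - px, ey - py) * pixel_spacing_mm


expected = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
predicted = [[0, 0, 0], [0, 0, 1], [0, 0, 0]]
# The centers are one pixel apart, so the error equals the pixel spacing.
print(roi_error_mm(expected, predicted, pixel_spacing_mm=0.8))  # 0.8
```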

Results

Automatic locator scan positioning

Performance of the locator scan positioning model was investigated by computing the prediction error on the external test dataset (n=30). The prediction error for a single CT scan is computed as the absolute difference between the network prediction and target in millimeters. We report the mean (µ) and the standard deviation of the prediction error (σ) over the entire test set (Table 3).
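A minimal sketch of this summary statistic is shown below. The 2.28 mm pixel spacing is the resized-topogram spacing given in the Methods; the function name `error_summary`, the example row numbers, and the use of the population standard deviation are assumptions, since the text does not say whether the population or sample form was used.

```python
from statistics import mean, pstdev

PIXEL_SPACING_MM = 2.28  # spacing of the resized topograms (from the Methods)


def error_summary(predicted_rows, target_rows, spacing_mm=PIXEL_SPACING_MM):
    """Mean and standard deviation of the absolute row error, in mm."""
    errors_mm = [abs(p - t) * spacing_mm
                 for p, t in zip(predicted_rows, target_rows)]
    # Assumption: population standard deviation over the test set.
    return mean(errors_mm), pstdev(errors_mm)


# Hypothetical predictions and targets (row numbers on the 224-row grid).
mu, sigma = error_summary([100, 110, 95], [102, 110, 100])
print(f"{mu:.2f} ± {sigma:.2f} mm")
```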

Table 3

| Network | Learning rate | Batch size | Epochs | Error (mm), original selection | Error (mm), expert-user selection |
|---|---|---|---|---|---|
| VGG16 | 0.001 | 10 | 500 | 25.26±20.00 | 25.5±20.99 |
| VGG19 | 0.001 | 10 | 500 | 30.13±34.48 | 25.5±21.00 |
| ResNet50 | 0.001 | 10 | 500 | 15.97±13.01 | 13.16±19.79 |

Performance was evaluated by computing the prediction error on a test dataset consisting of 30 unseen topograms. Data are presented as mean ± standard deviation.

To account for the possible impact of intra-operator variance, we also trained and tested the models using expert-level labels of a single user in addition to those originally extracted from the DICOM attributes. The expert-level ground-truth labels were retrospectively selected by Penn Medicine’s Lead CT Technologist with the aid of a dedicated graphical user interface (GUI) that we implemented, where the annotator clicked on the location of the locator scan for each CT topogram and the selected position along the patient axis was recorded. No reference landmarks, such as the original locator scan position, were provided to the annotator.

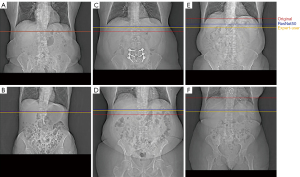

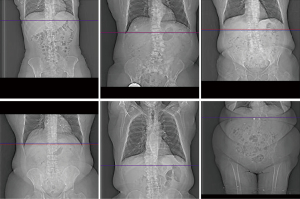

Of the three models, the ResNet50-based model yielded the smallest predictive error (13.16±19.79 mm). Adding further fully connected layers at the end of the network did not improve algorithmic performance. Importantly, models trained on labels from a single expert-level operator yielded a smaller or similar predictive error, verifying inter-operator variance as a significant source of error. An example of the inter-operator variance of the original selections can be clearly observed when compared to the respective selections made by a single expert-level operator (Figure 4). The network predictions provide improved positional consistency compared to the high degree of variance in manual slice positioning performed by CT operators, particularly when examined relative to distinct anatomical structures such as the diaphragm.

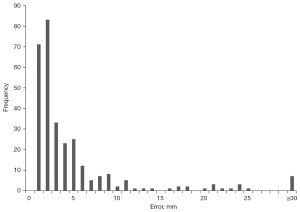

To better understand the high standard deviation in our model’s predictive error, we computed the error distribution of all training and test samples (Figure 5). Good agreement is observed across most samples, with 90% having errors below a single centimeter. Examples of coronal topograms that resulted in good agreement with the locations selected by the expert-level user are shown in Figure 6. While the optimal locator scan position for bolus tracking is not well documented in the literature, Adibi et al. have proposed a general placement of 1 cm below the diaphragm (2), consistent with our CNN predictions.

We subsequently analyzed samples with large error (here defined as those with predictive error ≥30 mm). The network showed a large error on 7 of the 298 samples (Figure S1). These errors can be classified into two categories: prediction errors and labelling errors. In the first category, the network predictions were approximately a single vertebra away from the location selected by the operator (Figure S1A-S1C). Labelling errors were observed in four samples in which there was a clear inconsistency in the operator’s ground-truth labelling (Figure S1D-S1G). In these cases, however, the network was able to accurately predict locator scan positions, even in the presence of artifacts and implants. Excluding the four samples containing labelling errors significantly reduced the average predictive error of our model, for a final average predictive error of 9.76±6.78 mm (Table 4).

Table 4

| Data | Error (mm) |
|---|---|
| Original operator selections | 27.81±25.63 |
| ResNet50, expert-user selections | 13.16±19.79 |
| ResNet50, expert-user selections (labelling errors excluded) | 9.76±6.78 |

Error is computed as the absolute difference between the network prediction and target (single expert-level selections) in millimeters. Data are presented as mean ± standard deviation.

Automatic ROI positioning

Performance of the segmentation network was investigated by computing the Euclidean distance between the centers of mass of the expected and predicted ROIs in millimeters. The raw output of the ROI positioning network is a 2D confidence map with each pixel value in the range of 0 to 1. To generate the binary predicted mask used for evaluation, we applied a binary threshold of 0.9999999, setting each pixel of the mask to either 0 or 1 depending on its value relative to the threshold (Figure S2).
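The thresholding step amounts to a single comparison per pixel, as in the sketch below. The threshold value is taken from the text; the function name `binarize` and the choice of an inclusive comparison (values equal to the threshold map to 1) are assumptions.

```python
THRESHOLD = 0.9999999  # binary threshold reported in the text


def binarize(confidence_map, threshold=THRESHOLD):
    """Convert a 2D confidence map (values in [0, 1]) to a binary mask."""
    # Assumption: pixels at or above the threshold are set to 1.
    return [[1 if value >= threshold else 0 for value in row]
            for row in confidence_map]


confidence = [[0.5, 1.0], [0.9999999, 0.99]]
print(binarize(confidence))  # [[0, 1], [1, 0]]
```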

Overall, our ROI segmentation network showed no significant difference from the original manual annotations and achieved a sub-millimeter mean error on the test dataset (Table 5). Furthermore, the network accurately predicted ROI position in the presence of imaging artifacts, supporting the network’s robustness (Figure 7).

Table 5

| | Mean | SD | Median | Max |
|---|---|---|---|---|
| Error (mm) | 0.99 | 0.66 | 0.80 | 2.74 |

Error was obtained by computing the Euclidean distance between the center of mass of the expected and predicted ROI on a test dataset. ROI, region of interest; SD, standard deviation.

Discussion

The current computed tomography (CT) workflow involves performing complex scanning protocols and processing requirements on a regular basis (1). Computer-assisted bolus tracking can optimize time delays between contrast injection and diagnostic scan initiation in contrast-enhanced CT compared to fixed delay techniques; however, the procedure is both time-consuming and subject to intra- and inter-operator variances, which may affect enhancement levels in diagnostic scans (9). In the present study, we developed machine learning algorithms to automate and standardize the (I) locator scan positioning and (II) aorta positioning tasks of the bolus tracking procedure. The task of locator scan positioning is formulated as a regression problem, where the limited amount of annotated data was circumvented using transfer learning, while the task of ROI segmentation is formulated as a segmentation problem. The CNNs were trained using retrospective clinical datasets (n=298) and evaluated on an unseen test dataset (n=30).

Our locator scan positioning network offered greater positional consistency compared to manual slice positionings, verifying intra- and inter-operator variance as major sources of error in the bolus tracking procedure. When trained using single-user ground-truth labels, the locator scan positioning network achieved a 9.76±6.78 mm error on a test dataset. Our ROI segmentation network showed no significant difference with manual annotations and achieved a sub-millimeter error between the predicted and expected ROI (0.99±0.66 mm). This automated pipeline offers improved standardization and accuracy in the bolus tracking procedure compared to manual methods, highlighting the potential for greater consistency between examinations of different patients as well as between examinations of a single patient at different evaluation time points.

Significant reductions in inter-operator variance open opportunities to evaluate, model, and reduce the effects of individualized patient kinetics, allowing for further refinement of diagnostic CT acquisitions. While greater accuracy in the bolus tracking procedure increases the likelihood of reaching optimal phase timings, combining this pipeline with automated phase-identification algorithms can ensure that the optimal phase is successfully reached in a patient, regardless of anthropometric variation and hemodynamic status (24,25). Importantly, non-optimal portal venous phase acquisition timing occurs in one out of three patients in multicenter clinical trials, significantly altering tumor density measurements (26). The ability to accurately identify phase timings also allows for further refinement of diagnostic CT procedures by standardizing iodine injection and uptake and potentially reducing iodine dose. Finally, this pipeline may be a useful tool for the standardization of novel dual-contrast imaging procedures, in which information on the first contrast agent is essential to accurately forecast the time point of maximal arterial enhancement by the second contrast agent (27).

Our study has several limitations. First, inter- and intra-operator variances contributed to a lack of accurate ground-truth labels for the locator scan positioning network. Revision of ground-truth labels, such as by taking the average of multiple CT technologist annotations for each sample (17), may provide more accurate training data for improved network performance. Second, our models did not consider the effects of patient heterogeneity. Collected CT patients varied in sex, age, cancer pathology, and medical state; thus, future studies evaluating the effects of inter-patient heterogeneity on model performance are critical to determining the applicability of our model. Third, our study serves as a proof-of-concept of the benefits of automated bolus tracking in contrast-enhanced CT and does not address patient outcomes. Positional consistency was used as a metric of the potential clinical benefit of adopting an automated approach; however, we did not assess the impact of slice localization offsets and segmentation differences on the final stratification of patients. Moving forward, clinical trials will be needed to determine the effects of automatic selection suggestions on image quality and patient diagnosis. Finally, the incorporation of automated bolus tracking in larger studies that aim to advance CT evaluations will quantify the time savings relative to the conventional workflow and support its integration into daily clinical routines.

In conclusion, we developed a machine learning pipeline to automate and standardize the locator scan positioning and ROI segmentation tasks in the bolus tracking workflow. By significantly reducing operator-related decisions, this method opens opportunities to evaluate, model, and reduce the effect of patient kinetics in contrast-enhanced CT exams.

Acknowledgments

Special thanks to Penn Medicine’s Lead CT Technologist Michael Colfer for expert-level labeling of locator scan positionings.

Funding: The study was supported by the National Institutes of Health (No. R01EB030494).

Footnote

Reporting Checklist: The authors have completed the TRIPOD reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-22-686/rc

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-22-686/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the institutional review board of the University of Pennsylvania and individual consent for this retrospective analysis was waived.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Cody DD, Dillon CM, Fisher TS, Liu X, McNitt-Gray MF, Patel V. AAPM Medical Physics Practice Guideline 1.b: CT protocol management and review practice guideline. J Appl Clin Med Phys 2021;22:4-10.

- Adibi A, Shahbazi A. Automatic bolus tracking versus fixed time-delay technique in biphasic multidetector computed tomography of the abdomen. Iran J Radiol 2014;11:e4617.

- Zreik M, van Hamersvelt RW, Wolterink JM, Leiner T, Viergever MA, Isgum I. A Recurrent CNN for Automatic Detection and Classification of Coronary Artery Plaque and Stenosis in Coronary CT Angiography. IEEE Trans Med Imaging 2019;38:1588-98.

- Irmak E. Multi-Classification of Brain Tumor MRI Images Using Deep Convolutional Neural Network with Fully Optimized Framework. Iran J Sci Technol Trans Electr Eng 2021;45:1015-36.

- Lee JH, Lee JM, Kim SJ, Baek JH, Yun SH, Kim KW, Han JK, Choi BI. Enhancement patterns of hepatocellular carcinomas on multiphasic multidetector row CT: comparison with pathological differentiation. Br J Radiol 2012;85:e573-83.

- Bae KT, Seeck BA, Hildebolt CF, Tao C, Zhu F, Kanematsu M, Woodard PK. Contrast enhancement in cardiovascular MDCT: effect of body weight, height, body surface area, body mass index, and obesity. AJR Am J Roentgenol 2008;190:777-84.

- Bae KT, Tao C, Gürel S, Hong C, Zhu F, Gebke TA, Milite M, Hildebolt CF. Effect of patient weight and scanning duration on contrast enhancement during pulmonary multidetector CT angiography. Radiology 2007;242:582-9.

- Fukukura Y, Takumi K, Kamiyama T, Shindo T, Higashi R, Nakajo M. Pancreatic adenocarcinoma: a comparison of automatic bolus tracking and empirical scan delay. Abdom Imaging 2010;35:548-55.

- Kurokawa R, Maeda E, Mori H, Amemiya S, Sato J, Ino K, Torigoe R, Abe O. Effect of bolus tracking region-of-interest position within the descending aorta on luminal enhancement of coronary arteries in coronary computed tomography angiography. Medicine (Baltimore) 2019;98:e15538.

- McNitt-Gray MF, Bidaut LM, Armato SG, Meyer CR, Gavrielides MA, Fenimore C, McLennan G, Petrick N, Zhao B, Reeves AP, Beichel R, Kim HJ, Kinnard L. Computed tomography assessment of response to therapy: tumor volume change measurement, truth data, and error. Transl Oncol 2009;2:216-22.

- Sheikhbahaei S, Mena E, Yanamadala A, Reddy S, Solnes LB, Wachsmann J, Subramaniam RM. The Value of FDG PET/CT in Treatment Response Assessment, Follow-Up, and Surveillance of Lung Cancer. AJR Am J Roentgenol 2017;208:420-33.

- Hua CH, Shapira N, Merchant TE, Klahr P, Yagil Y. Accuracy of electron density, effective atomic number, and iodine concentration determination with a dual-layer dual-energy computed tomography system. Med Phys 2018;45:2486-97.

- Sellerer T, Noël PB, Patino M, Parakh A, Ehn S, Zeiter S, Holz JA, Hammel J, Fingerle AA, Pfeiffer F, Maintz D, Rummeny EJ, Muenzel D, Sahani DV. Dual-energy CT: a phantom comparison of different platforms for abdominal imaging. Eur Radiol 2018;28:2745-55.

- Zopfs D, Graffe J, Reimer RP, Schäfer S, Persigehl T, Maintz D, Borggrefe J, Haneder S, Lennartz S, Große Hokamp N. Quantitative distribution of iodinated contrast media in body computed tomography: data from a large reference cohort. Eur Radiol 2021;31:2340-8.

- Pham DL, Xu C, Prince JL. Current methods in medical image segmentation. Annu Rev Biomed Eng 2000;2:315-37.

- Belharbi S, Chatelain C, Hérault R, Adam S, Thureau S, Chastan M, Modzelewski R. Spotting L3 slice in CT scans using deep convolutional network and transfer learning. Comput Biol Med 2017;87:95-103.

- Kanavati F, Islam S, Aboagye EO, Rockall A. Automatic L3 slice detection in 3D CT images using fully-convolutional networks. arXiv:1811.09244. 2018 Nov 22.

- Dabiri S, Popuri K, Ma C, Chow V, Feliciano EMC, Caan BJ, Baracos VE, Beg MF. Deep learning method for localization and segmentation of abdominal CT. Comput Med Imaging Graph 2020;85:101776.

- Weiss K, Khoshgoftaar TM, Wang D. A survey of transfer learning. J Big Data 2016;3:9.

- Deng J, Dong W, Socher R, Li LJ, Li K, Li FF. ImageNet: A large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition. Miami, FL: IEEE, 2009: 248-55.

- Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv:1409.1556. 2015 Apr 10.

- He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV, USA: IEEE, 2016: 770-8.

- Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv:1505.04597. 2015 May 18. doi:10.1007/978-3-319-24574-4_28

- Ma J, Dercle L, Lichtenstein P, Wang D, Chen A, Zhu J, Piessevaux H, Zhao J, Schwartz LH, Lu L, Zhao B. Automated Identification of Optimal Portal Venous Phase Timing with Convolutional Neural Networks. Acad Radiol 2020;27:e10-8.

- Silverman PM, Brown B, Wray H, Fox SH, Cooper C, Roberts S, Zeman RK. Optimal contrast enhancement of the liver using helical (spiral) CT: value of SmartPrep. AJR Am J Roentgenol 1995;164:1169-71.

- Dercle L, Lu L, Lichtenstein P, Yang H, Wang D, Zhu J, Wu F, Piessevaux H, Schwartz LH, Zhao B. Impact of Variability in Portal Venous Phase Acquisition Timing in Tumor Density Measurement and Treatment Response Assessment: Metastatic Colorectal Cancer as a Paradigm. JCO Clin Cancer Inform 2017;1:1-8.

- Muenzel D, Daerr H, Proksa R, Fingerle AA, Kopp FK, Douek P, Herzen J, Pfeiffer F, Rummeny EJ, Noël PB. Simultaneous dual-contrast multi-phase liver imaging using spectral photon-counting computed tomography: a proof-of-concept study. Eur Radiol Exp 2017;1:25.