Automatic evaluation of endometrial receptivity in three-dimensional transvaginal ultrasound images based on 3D U-Net segmentation

Introduction

The endometrium, including the superior inner cortex and mucosa, is essential for fertility and reproductive health. Clinically, endometrial thickness is essential for diagnosing endometrial-related diseases (1-6). In addition, endometrial receptivity reflects the receptivity of the endometrium to embryos, which is often evaluated by endometrial thickness in the clinic (7-9). If the endometrium is too thin, it is not conducive to embryonic implantation and development.

Ultrasound imaging and magnetic resonance imaging (MRI) are the main ways to diagnose uterine and endometrial-related diseases (10-12). To date, three-dimensional (3D) ultrasound has been widely used in clinical medicine to provide continuous anatomical images for diagnosis. 3D ultrasound enables doctors to observe the endometrium’s shape, echo, and thickness more intuitively, and it is of great value in diagnosing uterine diseases (13-16). Furthermore, 3D ultrasound imaging provides complete information about the uterus and endometrium in real time from three different planes: the coronal plane, sagittal plane, and transverse plane.

In ultrasound-based endometrial disease screening, the quantitative evaluation result of endometrial diseases is generally achieved by measuring the thickness of the endometrium. In clinical medicine, the acceptable error range of endometrial thickness measurement is ±2 mm (17,18). However, manual measurement of endometrial thickness is based on personal experience in clinical diagnosis, and subjective factors may affect the measurement position. Furthermore, some endometrial boundaries are challenging to identify because of the resolution and noise of the image. In addition, the irregular shape of the endometrium increases the difficulty of manual measurement by doctors. Therefore, the current diagnostic methods may have issues such as low accuracy and poor repeatability of the endometrium measurement results.

So far, the research on endometrial segmentation in ultrasound images has achieved some valuable results. Yang et al. (19) proposed an endometrium segmentation algorithm in uterus contraction, using a recursive model and multi-threshold technology to extract the uterus in motion and automatically segment the endometrium to provide evaluation indexes for the treatment of infertility. Thampi et al. (20) used the level set method to segment the endometrium in two-dimensional (2D) ultrasound images of endometrial cancer. The algorithm first performed speckle reducing anisotropic diffusion (SRAD) filtering on the image, then selected the initial contour and realized the segmentation of the region of interest (ROI) using the level set. However, the segmentation result of this method will depend on the seed point selection of the level set.

In recent years, a convolutional neural network (CNN) has been widely applied to the processing and analysis of medical images and has shown excellent performance in medical image segmentation (21-23). The U-Net network is a typical CNN model for medical image segmentation. With the development of technology, researchers began to extend 2D convolution to 3D convolution to introduce the spatial information of data (24). The 3D U-Net was first developed for 3D medical image segmentation and achieved good research results (25). Chen et al. (26) proposed a 3D U-Net network based on the channel attention for multi-modal brain tumor segmentation. Mourya et al. (27) proposed the cascaded 3D U-Net to realize automatic segmentation of the liver and tumor in computed tomography (CT) images, and its Dice for liver segmentation reached 95%.

The CNN was also applied to endometrial segmentation. Hu et al. (28) used the visual geometry group (VGG) network to realize automatic endometrium segmentation in 2D ultrasound images and calculated the maximum endometrial thickness perpendicular to the central axis, which was extracted through the medial axis transformation (MAT) (29,30). Singhal et al. (31) proposed an endometrium segmentation algorithm based on fully convolutional networks (FCN) and level set and realized the automatic measurement of endometrial thickness. The algorithm performed the 2D segmentation and thickness measurement of endometrium through a hybrid variational curve propagation model, namely, the deep learning snake (DLS) segmentation model. Park et al. (32) employed the critical point discriminator to train the endometrium segmentation network to learn the shape distribution of the endometrium. As a result, the problem of edge blur and uneven texture of 2D ultrasound images was solved by adversarial learning that could realize the endometrium’s automatic segmentation.

The above endometrium segmentation studies were all based on the sagittal plane of 2D ultrasound images. Although 3D images were used by Singhal et al. (31), the network segmentation training was still carried out for 2D images slice by slice. However, compared with 2D, 3D ultrasound provides complete structural information. Furthermore, the accuracy of endometrium segmentation determines the accuracy of endometrial thickness measurements. Therefore, based on 3D ultrasound images, this paper presents an automatic endometrium segmentation using a 3D U-Net (33). Then, the method obtains the measurement of endometrium thickness. We present the following article in accordance with the STARD reporting checklist (available at https://qims.amegroups.com/article/view/10.21037/qims-21-1155/rc).

Methods

Materials

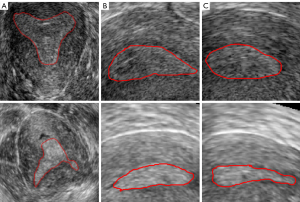

Constrained by the data collection time and data retention in the ultrasound instruments, we performed staged data collection to obtain sufficient data. In each stage of data collection, all ultrasound images were selected. In total, 113 three-dimensional transvaginal ultrasound (3D TVUS) images from October 2019 to May 2021 (October–December 2019, March–May 2020, October–December 2020, and March–May 2021) were collected retrospectively in this study. The ages of all patients ranged from 29 to 41 years. From the acquired data, we excluded patient data that were duplicated within one month, poor-quality images, and images with indistinguishable endometrial areas. After data screening, 85 cases were selected for this study, including 75 cases with endometrial adhesion and 10 cases without endometrial adhesion. The data selection process is shown in Figure 1. The images were collected with Voluson E8 three-dimensional ultrasonic equipment and the RIC5-9-D transducer (center frequency 6.6 MHz, bandwidth 5–9 MHz; GE Healthcare, Chicago, IL, USA). The slice spacing of the image data was between 0.22 and 0.31 mm, and the resolution was between 0.2933 and 4.413 pixels per mm. The three-section images of the endometrium with or without adhesion are shown in Figure 2: the coronal section of the endometrium in Figure 2A, the transverse section in Figure 2B, and the sagittal section in Figure 2C. The first row shows normal endometrium, the second row shows endometrium with adhesion, and the red line shows the endometrial boundary in each section.

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Institutional Review Board of Shengjing Hospital of China Medical University, and individual consent for this retrospective analysis was waived.

Study methods

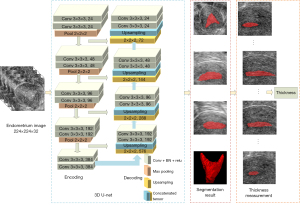

This paper used 3D U-Net to segment endometrium from 3D ultrasound images. Two experienced doctors manually annotated the endometrium on the coronal section by ITK-SNAP (http://www.itksnap.org/pmwiki/pmwiki.php) and measured the endometrial thickness as the gold standard for evaluation. In the case of disagreement, another more senior clinical physician was asked to analyze and confirm the final annotation results. The overall research method is shown in Figure 3. The 3D U-Net uses 3D convolution instead of 2D (34). With a 3D image as input, the structure information between slices of the image could be better extracted in the encoder part of the network, and the ROI could be more accurately located in the decoder part, which realized more accurate segmentation of the endometrium. Then, the endometrial thickness could be automatically measured according to the sagittal endometrium segmentation results, and the receptivity could be evaluated with the thickness.

Image preprocessing

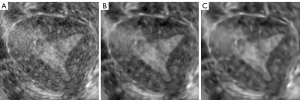

The noise of ultrasound images has a significant influence on model learning. Therefore, we filtered the original images using block-matching and 3D filtering (BM3D) and SRAD (35-37). As a result, the image noise was reduced while the edge information was preserved as much as possible. The two filtered images were combined with the original image to construct a three-channel image. Then, the augmented images were sent to the 3D U-Net to realize endometrium segmentation. The images after the two filtering processes are shown in Figure 4: the original image in Figure 4A, the image after SRAD filtering in Figure 4B, and the image after BM3D noise removal in Figure 4C.
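The three-channel construction can be illustrated with a minimal sketch. It assumes a channel-last layout and the order original, SRAD, BM3D, neither of which is stated in the text; the function name `stack_channels` is hypothetical, and the filtered volumes are stood in for by copies of the toy input because the filters themselves are outside the scope of the example.

```python
import numpy as np

def stack_channels(original, srad_filtered, bm3d_filtered):
    """Stack three same-shaped volumes into one channel-last array."""
    volumes = [np.asarray(v, dtype=np.float32)
               for v in (original, srad_filtered, bm3d_filtered)]
    if len({v.shape for v in volumes}) != 1:
        raise ValueError("all three volumes must have the same shape")
    # Assumed channel order: original, SRAD, BM3D.
    return np.stack(volumes, axis=-1)

# Toy volume standing in for one ultrasound image.
volume = np.random.default_rng(0).random((8, 8, 4), dtype=np.float32)
# Placeholders: real SRAD and BM3D outputs would be passed here instead.
three_channel = stack_channels(volume, volume, volume)
```

The result has one extra trailing axis of length 3, which is the shape a network with three input channels would expect under the channel-last assumption.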

To achieve better performance during deep learning model training, we chose two methods for data augmentation. One was to augment the training data by rotating, scaling, translating, and mirroring. The other was to randomly extract patches from the ROI of the data to increase the number of training samples (38,39). After trying different patch sizes in training, we decided to extract ten patches with a size of 96×96×32 from each case as the input for network training. During testing, patches of the same size were extracted with a sliding window, with 50% overlap between adjacent patches. When synthesizing the results, the mean value of the overlapping areas was calculated, and the prediction results were then restored to 224×224×32 images.
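The test-time procedure (sliding window, 50% overlap, averaging of overlapping predictions) can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: the helper names are hypothetical, `predict` is any callable that maps a patch to a same-shaped prediction, and the last window along each axis is shifted to end at the volume border, so it overlaps its neighbor by more than 50% when the size is not a multiple of the stride.

```python
import numpy as np

def window_starts(size, patch, stride):
    """Start indices covering [0, size), with a final window flush to the end."""
    starts = list(range(0, size - patch + 1, stride))
    if starts[-1] != size - patch:
        starts.append(size - patch)
    return starts

def sliding_window_predict(volume, predict, patch=(96, 96, 32)):
    """Run predict on overlapping patches and average the overlaps."""
    total = np.zeros(volume.shape, dtype=np.float64)
    count = np.zeros(volume.shape, dtype=np.float64)
    strides = [max(p // 2, 1) for p in patch]  # half a patch = 50% overlap
    grids = [window_starts(s, p, st)
             for s, p, st in zip(volume.shape, patch, strides)]
    for x in grids[0]:
        for y in grids[1]:
            for z in grids[2]:
                sl = (slice(x, x + patch[0]),
                      slice(y, y + patch[1]),
                      slice(z, z + patch[2]))
                total[sl] += predict(volume[sl])
                count[sl] += 1
    # Every voxel is covered at least once, so count is never zero.
    return total / count

volume = np.random.default_rng(0).random((224, 224, 32))
# Identity "model": the reassembled volume should match the input.
restored = sliding_window_predict(volume, predict=lambda p: p)
```

With a 224-voxel axis, a 96-voxel patch, and a 48-voxel stride, the window starts are 0, 48, 96, and 128; the depth axis needs a single window because the patch depth equals the volume depth.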

3D U-Net segmentation

We used a 3D U-Net to segment the endometrium. Since most of the research on endometrium segmentation has been carried out on 2D ultrasound images, we conducted a comparative experiment of 2D U-Net and 3D U-Net segmentation. Of the 85 cases, 15 (13 with adhesion and 2 without) were randomly selected as the testing set, and the other 70 were used as the training set. All experiments in this paper were carried out on an NVIDIA GTX 1080 graphics card (NVIDIA, Santa Clara, CA, USA).

In the 3D U-Net segmentation experiment, the size of the images fed into the network for training was 224×224×32. During training, the Adam optimizer was used with parameters beta1 = 0.9, beta2 = 0.999, and epsilon = 1e-8 (40). The initial learning rate was 10⁻³, and the learning rate decayed during training according to Eq. [1]. The batch size was 1 due to graphics processing unit (GPU) limitations, the number of epochs was 800, and the Dice loss was used as the loss function. Sigmoid was selected as the activation function, and a threshold of 0.5 was used to obtain the final segmentation mask from the output of the 3D U-Net. In the experiment, the original data (OD) set, the augmented OD set, and the augmented filtered data set were each used for training, and the corresponding segmentation models and segmentation results were obtained.
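For readers unfamiliar with the Dice loss and the thresholding step, a minimal NumPy sketch follows. It assumes the common soft Dice form with a small smoothing constant `eps` and a strict `>` comparison at the threshold; the text does not specify either detail, and the function names are hypothetical.

```python
import numpy as np

def dice_loss(probs, target, eps=1e-6):
    """Soft Dice loss: 1 - 2|P*G| / (|P| + |G|), smoothed by eps (assumed)."""
    probs = np.asarray(probs, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    intersection = np.sum(probs * target)
    return 1.0 - (2.0 * intersection + eps) / (np.sum(probs) + np.sum(target) + eps)

def to_mask(probs, threshold=0.5):
    """Binarize sigmoid outputs; voxels above the threshold become foreground."""
    return (np.asarray(probs) > threshold).astype(np.uint8)

target = np.array([1, 0, 1, 1])
perfect = dice_loss(target, target)        # prediction equals the label
disjoint = dice_loss([1, 0], [0, 1])       # no overlap at all
mask = to_mask([0.2, 0.7, 0.5, 0.9])
```

The loss approaches 0 for a perfect prediction and 1 when prediction and label do not overlap, which is why minimizing it drives the overlap upward.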

In Eq. [1], lr represents the current learning rate, initial_lr represents the initial learning rate, decay_rate represents the decay rate, global_step represents the current training round, and decay_steps represents the decay period.
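Eq. [1] itself is not reproduced in this text. The symbol names match the widely used exponential decay schedule, so the sketch below assumes that form, lr = initial_lr × decay_rate^(global_step / decay_steps). This is an assumption rather than a confirmed reading of the equation, and the decay rate and decay period used in the example are hypothetical values, since the text does not report them.

```python
def exponential_decay(initial_lr, decay_rate, global_step, decay_steps):
    """Assumed schedule: initial_lr * decay_rate ** (global_step / decay_steps)."""
    return initial_lr * decay_rate ** (global_step / decay_steps)

# Hypothetical decay settings; only the initial rate of 1e-3 comes from the text.
lr_start = exponential_decay(1e-3, decay_rate=0.9, global_step=0, decay_steps=100)
lr_later = exponential_decay(1e-3, decay_rate=0.9, global_step=100, decay_steps=100)
```

Under this form the learning rate is multiplied by decay_rate once every decay_steps training rounds, with a smooth interpolation in between.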

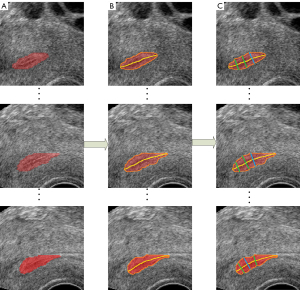

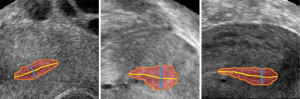

Automatic measurement of the endometrium thickness

After obtaining the segmentation results of the endometrium through the 3D U-Net, the automatic measurement of endometrium thickness was conducted. Although the original image in this study was the coronal image, the sagittal image better reflects the thickness of the endometrium. Therefore, the sagittal endometrium segmentation results were used to measure and evaluate thickness. First, the centerline of the segmented endometrium was extracted through the MAT. Then, the distances perpendicular to the centerline were calculated according to the endometrium contour. Finally, the maximum distance was taken as the endometrium thickness in this slice, consistent with the evaluation method in clinical medicine. The results of the automatic measurement of endometrium thickness according to the MAT are shown in Figure 5: different sagittal section images of the endometrium in Figure 5A, the corresponding endometrium contour and centerline obtained by the MAT in Figure 5B, and the corresponding thickness measurements in Figure 5C. The code for endometrial segmentation, evaluation, and endometrial thickness measurement can be found on GitHub (https://github.com/wx-hub/package.git).
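As a rough illustration of the idea, the sketch below estimates the maximum thickness of a 2D binary mask from the largest inscribed disc: the foreground pixel farthest from the background lies on the medial axis, and twice its distance approximates the local width. This is a simplified stand-in, not the MAT-based perpendicular measurement described above or the code in the linked repository. It assumes isotropic pixel spacing, uses a brute-force distance computation suitable only for small masks, and is accurate to within about one pixel; the function name is hypothetical.

```python
import numpy as np

def max_thickness_mm(mask, pixel_spacing_mm):
    """Approximate maximum thickness via the largest inscribed disc."""
    mask = np.asarray(mask, dtype=bool)
    fg = np.argwhere(mask)    # foreground pixel coordinates
    bg = np.argwhere(~mask)   # background pixel coordinates
    if len(fg) == 0 or len(bg) == 0:
        raise ValueError("mask must contain both foreground and background")
    # Distance from each foreground pixel to its nearest background pixel.
    diffs = fg[:, None, :] - bg[None, :, :]
    nearest = np.sqrt((diffs ** 2).sum(axis=2)).min(axis=1)
    # 2*d - 1 converts the centre-to-background distance into a pixel count.
    return (2.0 * nearest.max() - 1.0) * pixel_spacing_mm

# Toy mask: a band 5 pixels thick and 20 pixels long.
mask = np.zeros((11, 30), dtype=bool)
mask[3:8, 5:25] = True
thickness = max_thickness_mm(mask, pixel_spacing_mm=0.5)
```

For the toy band, the deepest pixel is 3 pixels from the background, giving 5 pixels, or 2.5 mm at the assumed 0.5 mm spacing.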

Evaluation method

The Dice similarity coefficient (DSC), Jaccard, sensitivity, and 95th percentile Hausdorff distance (HD95) were used as the parameters for the segmentation evaluation. The DSC, Jaccard, sensitivity, and HD95 were calculated as follows:

where TP, FP, and FN represent the number of true positive, false positive, and false negative pixels, and HD represents the maximum surface distance between the predicted mask map Pi of pixel i and the corresponding ground truth Gi manually marked by the expert. The Kth ranking distance was used to suppress outliers.
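The overlap-based indexes follow their standard definitions in terms of TP, FP, and FN: DSC = 2TP / (2TP + FP + FN), Jaccard = TP / (TP + FP + FN), and sensitivity = TP / (TP + FN). A minimal sketch is given below; the function name is hypothetical, HD95 is omitted because it requires surface distance computation, and empty masks (which would cause a division by zero) are not handled.

```python
import numpy as np

def overlap_metrics(pred, truth):
    """DSC, Jaccard, and sensitivity from two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # predicted and labeled foreground
    fp = int(np.sum(pred & ~truth))   # predicted but not labeled
    fn = int(np.sum(~pred & truth))   # labeled but missed
    return {
        "dsc": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),
        "sensitivity": tp / (tp + fn),
    }

pred = np.array([1, 1, 1, 0, 0, 0])
truth = np.array([1, 1, 0, 1, 0, 0])
metrics = overlap_metrics(pred, truth)
```

In the toy example TP = 2, FP = 1, and FN = 1, so the DSC is 4/6, the Jaccard index is 2/4, and the sensitivity is 2/3.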

Results

Endometrium segmentation results

Segmentation with different networks

For 2D U-Net, we selected the original coronal images containing the ROI as the input for network training. According to the case division of the training and testing sets, we obtained 2,000 training images and 480 testing images. During training, an Adam optimizer was used. The initial learning rate was 10⁻⁴, the number of epochs was 500, and binary cross-entropy was used as the loss function. Different batch sizes were tested, and the batch size with the best segmentation results was selected for the final model.

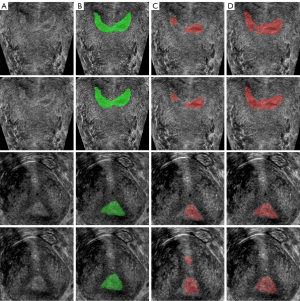

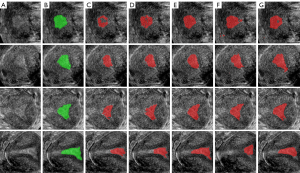

The segmentation results of 2D U-Net and 3D U-Net are shown in Figure 6: the original image in Figure 6A, the label of the endometrium in Figure 6B, and the segmentation results of 2D and 3D U-Net in Figure 6C,6D. Table 1 shows the evaluation indexes of the two segmentation models. The 2D U-Net results showed under-segmentation or over-segmentation, with a DSC of 64.04%, whereas the 3D U-Net results were markedly better, with a DSC of 85.80%.

Table 1

| Index | 2D U-Net | 3D U-Net |
|---|---|---|
| DSC (%) | 64.04 | 85.80 |
| Jaccard (%) | 50.43 | 75.33 |

2D U-net, two-dimensional U-shape network; 3D, three-dimensional; DSC, dice similarity coefficient.

Segmentation with different preprocessing

Based on the 3D U-Net, we evaluated the segmentation results of the OD, the augmented data (AD) obtained by the traditional augmentation method, the enhanced augmented data (EAD) obtained after image filtering, the patched data (PD) of the original image, and the enhanced patched data (EPD) obtained after image filtering. The final results are shown in Table 2. The DSCs of the segmentation results obtained from OD and AD were 85.80% and 87.20%, respectively. On EAD, the segmentation result achieved a DSC of 90.83%, which was 5.03 and 3.63 percentage points higher than those of OD and AD, respectively. In the patch-based 3D U-Net, the DSC of the model based on PD was 81.66%, and that based on EPD was 84.30%, an increase of 2.64 percentage points. Overall, image filtering and the three-channel integration with the enhanced images improved the segmentation results. Compared with the patch-based method (EPD), the traditional augmentation method (EAD) achieved better segmentation performance, with a DSC that was 6.53 percentage points higher.

Table 2

| Index | OD | AD | EAD | PD | EPD |
|---|---|---|---|---|---|
| DSC (%) | 85.80 | 87.20 | 90.83 | 81.66 | 84.30 |
| Jaccard (%) | 75.33 | 78.20 | 83.35 | 70.00 | 73.30 |
| Sensitivity (%) | 83.71 | 85.05 | 90.85 | 75.39 | 79.89 |
| HD95 (mm) | 18.01 | 14.77 | 12.75 | 23.75 | 22.40 |

3D U-Net, three-dimensional U-shape network; OD, original data; AD, augmented data; EAD, enhanced augmented data; PD, patched data; EPD, enhanced patched data; DSC, dice similarity coefficient; HD95, 95th percentile Hausdorff distance.

The segmentation results from different training datasets are shown in Figure 7: the original images in Figure 7A, the label of the endometrium in Figure 7B, the segmentation results of the models trained on OD, AD, and EAD in Figure 7C,7D,7E, and the segmentation results of the models trained on PD and EPD in Figure 7F,7G. The model trained on EAD achieved the best endometrium segmentation result.

Endometrium thickness measurements

In this study, the endometrium thickness measurements were performed on the sagittal images of the 15 cases in the testing set, based on the segmentation results of the model trained on EAD. At the same time, two experienced doctors were asked to manually measure the endometrium thickness of these 15 cases, which was regarded as the gold standard for evaluation. Mean absolute error (MAE), root mean square error (RMSE), and standard deviation (STD) were selected to evaluate the measurement error. The results are shown in Table 3. The calculation formulae of MAE, RMSE, and STD are as follows:

Table 3

| Index | MAE (mm) | RMSE (mm) | STD (mm) | <±2 mm (%) |
|---|---|---|---|---|
| Proposed method | 0.75 | 1.07 | 0.80 | 94.20 |

MAE, mean absolute error; RMSE, root mean square error; STD, standard deviation.

where $\hat{y}_i$ and $y_i$ represent the true value and the predicted value, respectively, and $\bar{y}$ represents the mean value of $y_i$.
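Because the formulae are not reproduced in this text, the sketch below uses the standard definitions as an assumption: MAE is the mean of the absolute differences between true and predicted values, RMSE is the square root of the mean squared difference, and STD is the population standard deviation of the predicted values about their mean, following the description of the mean value given above. The share of measurements within the ±2 mm range uses a strict comparison. The function name and the toy measurements are hypothetical and are not data from the study.

```python
import math

def thickness_errors(true_mm, pred_mm, tolerance_mm=2.0):
    """MAE, RMSE, STD, and percentage of errors within the tolerance."""
    errors = [p - t for t, p in zip(true_mm, pred_mm)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    # Assumption: STD is taken over the predicted values (population form).
    mean_pred = sum(pred_mm) / n
    std = math.sqrt(sum((p - mean_pred) ** 2 for p in pred_mm) / n)
    within = 100.0 * sum(abs(e) < tolerance_mm for e in errors) / n
    return {"mae": mae, "rmse": rmse, "std": std, "within_pct": within}

# Toy measurements in mm, for illustration only.
true_mm = [8.0, 10.0, 6.0, 9.0]
pred_mm = [8.5, 9.0, 6.0, 12.0]
stats = thickness_errors(true_mm, pred_mm)
```

In the toy data the errors are 0.5, -1.0, 0.0, and 3.0 mm, so three of the four measurements fall inside the ±2 mm range.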

The allowable measurement error range of endometrium thickness in clinical medicine is ±2 mm. The experimental results show that 94.20% of the measurement results were within the error range based on the measurement method proposed in this paper. Figure 8 shows the measurement results of the endometrium thickness based on 3D U-Net segmentation, in which the red region shows the segmentation result of the endometrium, the orange line is the contour of the endometrium, the yellow line is the centerline of the endometrium, and the green line shows the maximum thickness of the endometrium.

Discussion

Most of the existing endometrium segmentation studies were conducted on 2D images, including using traditional image segmentation methods and deep learning methods. In this study, the segmentation results obtained by 2D U-Net were unsatisfactory, although the images were preprocessed and augmented. In contrast, the segmentation accuracy improved when using the 3D U-Net, and the DSC reached 90.83%, which is 26.79% higher than that of the 2D U-Net. The reason for the improvement is that the signal-to-noise ratio of the ultrasound image is relatively poor, and the morphology of the endometrium is irregular. In addition, 2D segmentation ignores the information between the image slices, so 3D segmentation results are significantly better than those of 2D segmentation. Finally, we compared the proposed method with the existing endometrium segmentation methods, as shown in Table 4.

Table 4

| Study | Method | Case number | Image number | Input image size | Dice (%) | Jaccard (%) | Thickness <±2 mm (%) |
|---|---|---|---|---|---|---|---|
| Hu et al. (28) | 2D VGG-based U-Net | 91 | 1,031 | 192×256 | 85.30 | – | 87.50 |
| Singhal et al. (31) | 2D deep learned snake | 59 | 330 | 200×200 | – | – | 87.00 |
| Park et al. (32) | 2D segmentation framework with a discriminator | – | 3,372 | 256×320 | 82.67 | 70.46 | – |
| This study | 3D U-Net | 85 | 2,480 | 224×224×32 | 90.83 | 83.35 | 94.20 |

2D VGG-based U-Net, two-dimensional visual geometry group based on U-shape network; 3D U-Net, three-dimensional U-shape network.

When using 3D U-Net for endometrium segmentation, we compared the traditional data augmentation and patch-based methods. The experimental results showed that the DSC of the traditional data augmentation method (EAD) was 90.83%, while the patch-based method (EPD) was only 84.30%. The analysis showed that the patch-based augmentation method had an excellent segmentation effect for normal endometrium. However, for endometrium with adhesion, although the extraction of the patches can increase the sample size, the patch only contains local information, which leads to missing or having difficulty distinguishing the local edge and results in incomplete segmentation or over-segmentation.

In addition, we used 3-fold cross-validation to verify the optimal model, with EAD as the final uterine image preprocessing method and 3D U-Net as the final uterine segmentation network. First, 18% of the data (15 cases) were randomly selected as the test set. Then, the remaining data were randomly divided into three equal parts, two of which were used for training and the other for validation. This process was carried out in turn, and the optimal model was then trained with all data other than the test set to obtain the final segmentation model. Finally, the segmentation performance of the model was evaluated on the test set, achieving a DSC of 89.1% and an STD of 0.0296. Compared with the previous test results (DSC of 90.83%, STD of 0.0325), the DSC of the optimal model was slightly lower, but the STD was improved. Thus, the segmentation accuracy of the optimal model was maintained, and the stability of the test results was improved.
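The splitting procedure can be sketched as follows. The function name, the seed, and the use of integer case identifiers are assumptions for illustration. Since the 70 remaining cases do not divide evenly by three, this sketch produces folds of 24, 23, and 23 cases; how the study handled the remainder is not stated.

```python
import random

def split_cases(case_ids, n_test=15, n_folds=3, seed=0):
    """Hold out a test set, then build train/validation splits per fold."""
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)
    test, rest = ids[:n_test], ids[n_test:]
    # Deal the remaining cases round-robin into n_folds parts.
    folds = [rest[i::n_folds] for i in range(n_folds)]
    splits = []
    for k in range(n_folds):
        val = folds[k]
        train = [c for j, fold in enumerate(folds) if j != k for c in fold]
        splits.append((train, val))
    return test, splits

# 85 hypothetical case identifiers.
test_ids, splits = split_cases(range(85))
```

Each fold serves once as the validation set while the other two are used for training, and the held-out test cases never appear in any training or validation split.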

An essential purpose of endometrium segmentation is to measure the endometrium thickness. Therefore, the segmentation results directly affect the accuracy of the thickness measurement. This study compared the automatic measurement of endometrium thickness based on different segmentation results. The experimental results showed that the accuracy of the endometrium thickness measurement was highest when using the 3D U-Net model trained on EAD: 94.20% of the measurement results were within the error range, and only a few cases had relatively large thickness measurement errors because of their blurred endometrium boundaries and severe endometrial damage. We compared the proposed method with the existing endometrium thickness measurement methods, as shown in Table 4.

In this study, the endometrium thickness was calculated on sagittal images, which is consistent with the measurement method of doctors. The method was applied in the clinical process for experimental testing. Next, we will try to measure the thickness of the endometrium based on 3D segmentation results to provide further assistance to physicians. In addition, there were some poor-quality images encountered during the data collection process, which are shown in Figure 9. For these poor-quality images, an experienced doctor also has difficulty providing representative criteria for segmentation. Therefore, the poor-quality images were excluded from the datasets. The segmentation of the poor-quality images will be discussed with doctors subsequently. Moreover, the experimental datasets in this paper were obtained from a single source with limited access to data. In future work, we can obtain more data samples to train and test the model.

Conclusions

This paper presents an automatic endometrium segmentation method based on 3D U-Net and demonstrates an endometrial thickness measurement method. The experimental results show that the segmentation model trained with traditional data augmentation and image filtering (EAD) achieves the best performance, reaching a DSC of 90.83%, which is higher than those of the patch-based 3D U-Net and the 2D U-Net. Based on the segmentation results, the MAE and RMSE of the automatic endometrium thickness measurement were 0.75 and 1.07 mm, respectively. A total of 94.20% of the measurement results in the testing dataset were within the allowable error range of clinical medicine. Therefore, the method proposed in this paper can effectively help doctors in their diagnostic decision making.

Acknowledgments

Funding: This work was supported by the National Natural Science Foundation of China (NSFC) under grant number 61873257 and the Guizhou Province Science and Technology Project under grant number Qiankehezhicheng [2019] 2794.

Footnote

Reporting Checklist: The authors have completed the STARD reporting checklist. Available at https://qims.amegroups.com/article/view/10.21037/qims-21-1155/rc

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://qims.amegroups.com/article/view/10.21037/qims-21-1155/coif). The authors report that this work was supported by the National Natural Science Foundation of China (NSFC) under grant number 61873257 and the Guizhou Province Science and Technology Project under grant number Qiankehezhicheng [2019] 2794. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Institutional Review Board of Shengjing Hospital of China Medical University, and individual consent for this retrospective analysis was waived.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Stachowiak G, Zając A, Pertynska-Marczewska M, Stetkiewicz T. 2D/3D ultrasonography for endometrial evaluation in a cohort of 118 postmenopausal women with abnormal uterine bleedings. Ginekol Pol 2016;87:787-92. [Crossref] [PubMed]

- Wolman I, Jaffa AJ, Sagi J, Hartoov J, Amster R, David MP. Transvaginal ultrasonographic measurements of endometrial thickness: a reproducibility study. J Clin Ultrasound 1996;24:351-4. [Crossref] [PubMed]

- Yang X, Hong D, Yu L, Ma H, Na L. The application of ultrasound monitoring on follicle and endometrium in infertility treatment. Guangzhou Med J 2013;44:17-20.

- Mariani LL, Mancarella M, Fuso L, Baino S, Biglia N, Menato G. Endometrial thickness in the evaluation of clinical response to medical treatment for deep infiltrating endometriosis: a retrospective study. Arch Gynecol Obstet 2021;303:161-8. [Crossref] [PubMed]

- Long B, Clarke MA, Morillo ADM, Wentzensen N, Bakkum-Gamez JN. Ultrasound detection of endometrial cancer in women with postmenopausal bleeding: Systematic review and meta-analysis. Gynecol Oncol 2020;157:624-33. [Crossref] [PubMed]

- Nama A, Kochar S, Kumar A, Suthar N, Poonia L. Significance of endometrial thickness on transvaginal ultrasonography in abnormal uterine bleeding. J Curr Res Sci Med 2020;6:109-13.

- Cui YF, Liu Y, Zhang J, Yang XK, Yan-Min MA, Wang SY, Jia CW. The investigation of the correlation between endometrial thickness and pregnancy outcomes in IVF-ET. Chinese Journal of Birth Health & Heredity 2014;22:113-5.

- Eftekhar M, Mehrjardi SZ, Molaei B, Taheri F, Mangoli E. The correlation between endometrial thickness and pregnancy outcomes in fresh ART cycles with different age groups: a retrospective study. Middle East Fertil Soc J 2019;24:291-8.

- Zhao J, Zhang Q, Wang Y, Li Y. Endometrial pattern, thickness and growth in predicting pregnancy outcome following 3319 IVF cycle. Reprod Biomed Online 2014;29:291-8. [Crossref] [PubMed]

- Ohl J, Bettahar-Lebugle K, Rongières C, Nisand I. Role of ultrasonography in diagnosing female infertility. Gynecol Obstet Fertil 2000;28:324-39. [PubMed]

- Nalaboff KM, Pellerito JS, Ben-Levi E. Imaging the endometrium: disease and normal variants. Radiographics 2001;21:1409-24. [Crossref] [PubMed]

- Koyama T, Tamai K, Togashi K. Staging of carcinoma of the uterine cervix and endometrium. Eur Radiol 2007;17:2009-19. [Crossref] [PubMed]

- Xing LI, Sang JZ, Bai LJ, Wei-Yang LV, Hui LI, Liang X, Ultrasound DO. Application Value of Comparison Transvaginal Three-Dimensional Energy Doppler Ultrasound and Ultrasound Elastography in the Early Diagnosis of Cervical Cancer. China Medical Devices 2017;32:57-60,82.

- Yang CH, Chung PC, Tsai YC, Chuang LY. Endometrium characterization in connection with uterine contraction. IEEE Trans Nucl Sci 2001;48:1435-9. [Crossref]

- Apirakviriya C, Rungruxsirivorn T, Phupong V, Wisawasukmongchol W. Diagnostic accuracy of 3D-transvaginal ultrasound in detecting uterine cavity abnormalities in infertile patients as compared with hysteroscopy. Eur J Obstet Gynecol Reprod Biol 2016;200:24-8. [Crossref] [PubMed]

- Bjoern O, Sevald B, Anders Herman T, Klaus PS, Sturla HE-N. editors. 3D transvaginal ultrasound imaging for identification of endometrial abnormality. Proceedings of the SPIE; 1995.

- Epstein E, Valentin L. Rebleeding and endometrial growth in women with postmenopausal bleeding and endometrial thickness < 5 mm managed by dilatation and curettage or ultrasound follow-up: a randomized controlled study. Ultrasound Obstet Gynecol 2001;18:499-504. [Crossref] [PubMed]

- Epstein E, Valentin L. Intraobserver and interobserver reproducibility of ultrasound measurements of endometrial thickness in postmenopausal women. Ultrasound Obstet Gynecol 2002;20:486-91. [Crossref] [PubMed]

- Yang CH, Chung PC, Tsai YC, Chuang LY. Estimation of the endometrium in ultrasound images using a recursive model. 2000 IEEE Nuclear Science Symposium Conference Record (Cat. No.00CH37149); 15-20 Oct 2000.

- Thampi LL, Paul V. Application of compression after the detection of endometrial carcinoma imaging: Future scopes. 2017 International conference of Electronics, Communication and Aerospace Technology (ICECA); 1 Apr 2017.

- Lai M. Deep Learning for Medical Image Segmentation. arXiv preprint 2015. Available online: https://arxiv.org/abs/1505.02000.

- Liu S, Wang Y, Yang X, Lei B, Liu L, Li SX, Ni D, Wang T. Deep Learning in Medical Ultrasound Analysis: A Review. Engineering 2019;5:261-75. [Crossref]

- Lin H, Xiao H, Dong L, Teo KB, Zou W, Cai J, Li T. Deep learning for automatic target volume segmentation in radiation therapy: a review. Quant Imaging Med Surg 2021;11:4847-58. [Crossref] [PubMed]

- Singh SP, Wang L, Gupta S, Goli H, Padmanabhan P, Gulyás B. 3D Deep Learning on Medical Images: A Review. Sensors (Basel) 2020;20:5097. [Crossref] [PubMed]

- Du G, Cao X, Liang J, Chen X, Zhan Y. Medical Image Segmentation based on U-Net: A Review. J Imaging Sci Techn 2020;64:1-12. [Crossref]

- Chen SL, Hu GH, Sun J. Medical Image Segmentation Based on 3D U-net. 2020 19th International Symposium on Distributed Computing and Applications for Business Engineering and Science (DCABES); 16-19 Oct 2020.

- Mourya GK, Bhatia D, Gogoi M, Handique A. CT Guided Diagnosis: Cascaded U-Net for 3D Segmentation of Liver and Tumor. IOP Conference Series: Materials Science and Engineering 2021. doi: 10.1088/1757-899X/1128/1/012049.

- Hu SY, Xu H, Li Q, Telfer BA, Brattain LJ, Samir AE. Deep Learning-Based Automatic Endometrium Segmentation and Thickness Measurement for 2D Transvaginal Ultrasound. Annu Int Conf IEEE Eng Med Biol Soc 2019;2019:993-7.

- Blum H. A transformation for extracting new descriptors of shape. Models for the Perception of Speech and Visual Form 1967;4:362-80.

- Katz RA, Pizer SM. Untangling the Blum Medial Axis Transform. Int J Comput Vision 2003;55:139-53. [Crossref]

- Singhal N, Mukherjee S, Perrey C. Automated assessment of endometrium from transvaginal ultrasound using Deep Learned Snake. 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017); 18-21 April 2017.

- Park H, Lee HJ, Kim HG, Ro YM, Shin D, Lee SR, Kim SH, Kong M. Endometrium segmentation on transvaginal ultrasound image using key-point discriminator. Med Phys 2019;46:3974-84. [Crossref] [PubMed]

- Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. editors. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. International Conference on Medical Image Computing and Computer-Assisted Intervention; 2016: Springer.

- Ronneberger O, Fischer P, Brox T. editors. U-Net: Convolutional Networks for Biomedical Image Segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention; 2015: Springer.

- Dabov K, Foi A, Katkovnik V, Egiazarian K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans Image Process 2007;16:2080-95. [Crossref] [PubMed]

- Qian C, Wu D. Image denoising by bounded block matching and 3D filtering. Signal Process 2010;90:2778-83. [Crossref]

- Santos LG, de Godoy CMG, de Jesus Prates L, Coelho RC. editors. Comparative Evaluation of Anisotropic Filters Used to Reduce Speckle in Ultrasound Images. Latin American Conference on Biomedical Engineering; 2019: Springer.

- Alkinani MH, El-Sakka MR. Patch-based models and algorithms for image denoising: a comparative review between patch-based images denoising methods for additive noise reduction. EURASIP J Image Video Process 2017;2017:58. [Crossref] [PubMed]

- Hou L, Samaras D, Kurc TM, Gao Y, Davis JE, Saltz JH. Patch-based Convolutional Neural Network for Whole Slide Tissue Image Classification. Proc IEEE Comput Soc Conf Comput Vis Pattern Recognit 2016;2016:2424-33. [Crossref] [PubMed]

- Kingma DP, Ba J. Adam: A Method for Stochastic Optimization. International Conference on Learning Representations 2015. https://arxiv.org/abs/1412.6980